Content Moderation for Your UGC Campaigns: All You Need To Know

If you run a campaign about a “gift,” you may get plenty of leads from English-speaking locations, but from Germany, all you may get are reports and bans. Wondering why? 🤔 In German, “Gift” means poison.

This is where content moderation sweeps in to save the day. Now that you can see why your campaign needs content moderation, let’s dig deeper and see what else it can do for you. 👇

The Role of Content Moderation

We all know that the Internet has no borders and that ideas roam free there. But with this freedom come challenges. Conversations can turn messy, and diverse views sometimes lead to confusion.

This is where content moderation steps in – like a digital guardian.

It’s not just about saying yes or no; it’s about keeping people safe, letting voices be heard, and preventing harm.

Ensuring User Safety and Online Community Health:

Content moderation is essential for creating a safe and welcoming environment for users and for addressing the potential risks posed by generative AI. By actively identifying and filtering out harmful, misleading, or manipulated content, including content generated by AI, online platforms can curb the spread of misinformation and protect users from harm.

This helps build users’ trust, encourage active participation, and foster a healthy brand community.

Balancing Freedom of Expression and Responsible Content Curation:

Online platforms value the principle of freedom of expression, allowing users to voice their opinions and share their thoughts. However, this freedom must be balanced with responsible content curation to maintain a respectful and inclusive environment.

Content moderation ensures that while users can express themselves, it’s done within the bounds of acceptable behavior and respectful communication.

Protecting Against Harmful, Illegal, or Inappropriate Content:

Content moderation protects against content that can cause harm, spread misinformation, or violate laws and regulations. It helps stop the spread of illegal material, such as copyright-infringing content, pirated software, and listings for prohibited substances.

Additionally, it helps safeguard users from exposure to explicit or graphic content that might be inappropriate for specific audiences.

Effective content moderation involves a combination of human moderators and automated systems working together to strike the right balance between these three roles. It’s a delicate task that requires careful consideration of context, cultural norms, and the evolving nature of online interactions.

Types of Content Moderation

Okay, let’s get into the nuts and bolts of content moderation! Think of it as a multi-layered process, like a security system for your favorite online hangouts. Here are the four primary flavors of content moderation:

Pre-moderation: Reviewing Content Before It’s Published

Just like a bouncer checking IDs at a club entrance, pre-moderation is about scanning content before it hits the spotlight.

This way, the not-so-cool stuff never even sees the light of day. It’s like a virtual velvet rope for your online spaces.

Post-moderation: Monitoring and Removing Content After Publication

Post-moderation is like cleaning up after a big party. Content gets published first, and then the moderation crew swoops in to see if anything needs tidying up.

If something’s off, it’s swiftly taken down. It’s like having an eagle-eyed janitor for the online world.

Reactive Moderation: Responding to User Reports and Feedback

Imagine you’re at a picnic, and someone spots a wasp nest. You’d tell the organizer, right? Similarly, reactive moderation depends on users sounding the alarm when they see problematic content. The moderation team then takes action, much like getting rid of those pesky wasps.

Proactive Moderation: Using AI and Algorithms to Detect Problematic Content

Here’s where the tech wizards step in. AI and smart algorithms scan through heaps of content, looking for red flags.

They’re like virtual bloodhounds sniffing out anything that doesn’t belong. It’s like having super-powered glasses that can spot trouble from a mile away.

So, as we dig deeper into content moderation, keep these flavors in mind. They’re the secret sauce that keeps the online party rocking while keeping the trolls and troublemakers at bay.
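To make the four flavors a bit more concrete, here is a minimal Python sketch of how they differ in when a check runs. Everything in it is hypothetical and for illustration only: the `Feed` class, the `keyword_flagger` function, and the report threshold of 3 are assumptions, and the keyword check is a toy stand-in for the AI classifier a proactive system would actually use.

```python
from dataclasses import dataclass, field
from typing import Callable

# A reviewer returns True if a post should be blocked or removed.
# It could be a human decision or an automated check (proactive moderation).
Reviewer = Callable[[str], bool]

# Hypothetical keyword list standing in for a real AI classifier.
BLOCKED_TERMS = {"scam", "spamlink"}


def keyword_flagger(post: str) -> bool:
    """Toy automated reviewer: flags a post containing any blocked term."""
    return any(word in BLOCKED_TERMS for word in post.lower().split())


@dataclass
class Feed:
    published: list[str] = field(default_factory=list)
    pending: list[str] = field(default_factory=list)
    reports: dict[str, int] = field(default_factory=dict)

    def submit_pre(self, post: str) -> None:
        """Pre-moderation: hold the post until someone reviews it."""
        self.pending.append(post)

    def review_pending(self, is_bad: Reviewer) -> None:
        """Publish clean posts from the queue and drop the rest."""
        self.published.extend(p for p in self.pending if not is_bad(p))
        self.pending.clear()

    def submit_post(self, post: str) -> None:
        """Post-moderation: publish immediately, review later."""
        self.published.append(post)

    def sweep(self, is_bad: Reviewer) -> None:
        """Remove already-published posts that fail review."""
        self.published = [p for p in self.published if not is_bad(p)]

    def report(self, post: str, threshold: int = 3) -> None:
        """Reactive moderation: take a post down after enough user reports."""
        self.reports[post] = self.reports.get(post, 0) + 1
        if self.reports[post] >= threshold and post in self.published:
            self.published.remove(post)


feed = Feed()
feed.submit_pre("Loving my new sneakers!")
feed.submit_pre("Click this scam offer")
feed.review_pending(keyword_flagger)  # an automated (proactive) reviewer
feed.submit_post("Great event today")
for _ in range(3):
    feed.report("Great event today")  # three user reports trigger removal
print(feed.published)  # ['Loving my new sneakers!']
```

One design choice worth noting: the same reviewer function can be plugged into the pre-moderation queue or the post-publication sweep, so whether a human or a machine makes the call is independent of when the check happens.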

Challenges in Content Moderation

Let’s talk challenges – the hurdles that content moderation teams tackle like digital superheroes. Imagine trying to keep a massive concert crowd in check – it’s a bit like that, just in the online world.

Here are the four biggies:

Scale: Dealing with Massive Amounts of User-Generated Content

Think about how much stuff people share online every second. Now imagine sifting through all of it to spot any unruly behavior. That’s the scale challenge.

Content moderation teams must handle a never-ending stream of posts, comments, videos, and more. This is where AI can support human moderators: there are already many examples of AI, including generative AI, being used to moderate posts, threads, and even images at scale.

Subjectivity: Navigating Cultural Differences and Diverse Perspectives

Ever had a friendly debate where you realized someone else saw things totally differently? Now picture that on a global scale.

Content can be interpreted in many ways, depending on culture, background, and personal views. Moderators need to walk a tightrope, ensuring that the same rules apply fairly to everyone, no matter where they’re from.
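The “gift” problem shows why one global rulebook isn’t enough. In German, “Gift” means poison, so a word that is harmless in an English campaign can alarm a German audience. Below is a minimal Python sketch, assuming hypothetical per-locale watchlists (`LOCALE_WATCHLISTS`) and a hypothetical `needs_review` helper. Real systems need far richer language and context handling than simple keyword matching.

```python
# Hypothetical per-locale watchlists: the same word can be harmless in one
# language and alarming in another (German "Gift" means "poison").
LOCALE_WATCHLISTS: dict[str, set[str]] = {
    "en": {"poison"},
    "de": {"gift"},
}


def needs_review(text: str, locale: str) -> bool:
    """Flag text for human review if it contains a term watched in this locale."""
    language = locale.split("-")[0].lower()  # "de-DE" -> "de"
    watchlist = LOCALE_WATCHLISTS.get(language, set())
    # Normalize words: strip common punctuation and lowercase them.
    words = {w.strip(".,!?\"'").lower() for w in text.split()}
    return not words.isdisjoint(watchlist)


print(needs_review("Win a free gift!", "en-US"))  # False
print(needs_review("Win a free gift!", "de-DE"))  # True
```

Flagging for human review, rather than blocking automatically, leaves room for a moderator to judge the context before anything is removed.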

Context: Understanding the Intent Behind Content for Accurate Moderation

Words can be tricky. “Cool” can mean excellent or chilly, depending on context. Similarly, understanding if a post is meant to be funny, informative, or offensive can be tough.

Content moderation teams play detective, trying to figure out what’s meant as harmless banter and what’s crossing the line.

Emerging Trends: Addressing New Forms of Content (e.g., Deepfakes)

Remember how your favorite superhero keeps adapting to new villains? Well, content moderators face their own evolving nemesis: new types of content.

Deepfakes, for instance, are like digital shape-shifting, making it hard to tell real from fake. Content moderation must keep up with these trends to stay one step ahead.

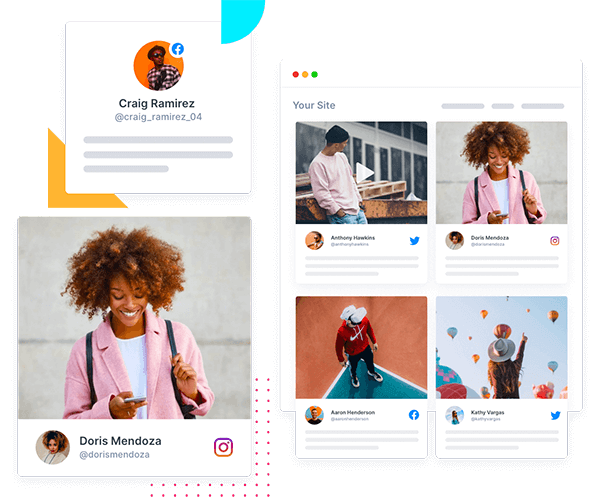

As we dive into the world of content moderation, keep these challenges in mind. They’re the puzzles moderators solve daily to keep our online spaces safe, respectful, and enjoyable. To make this task easier for you, let me introduce you to Tagbox 😎, a tool that helps you not only with content moderation but also with collecting, managing, and showcasing your user-generated content across all your marketing touchpoints.

Content Moderation Strategies

Alright, let’s break it down. Think of content moderation as teamwork, with humans and tech joining forces to keep the online world awesome. Here’s the scoop on how they do it:

Human Moderation

It’s like having watchful buddies looking out for you in the digital neighborhood. Check out what they’re up to:

- Importance of Well-Trained and Diverse Moderation Teams:

Different people on the team mean different perspectives. This helps catch tricky stuff that machines might miss. It’s like having friends from all over the world to keep things balanced.

- Establishing Clear Guidelines and Protocols:

Think of these as rules to make sure everyone plays fair. Moderators follow these rules to decide whether the content is good to go. It’s like having game rules for the online playground.

- Dealing with the Psychological Toll on Moderators:

Watching not-so-nice stuff all day can be tough. Moderators need support to stay cool. They’re the heroes keeping things clean and safe, after all.

AI and Automation

Now, meet the digital sidekicks that help out:

- Role of Machine Learning in Content Detection:

Machines learn patterns, like your pet learning where the treats are. They spot things that might not belong by crunching tons of data, which makes them great for handling large volumes of content.

- Benefits and Limitations of Automated Moderation:

Machines are clever, but they’re not perfect. Sometimes they miss tricky stuff or raise a flag by mistake. They’re like trusty pals who sometimes need a hand.

- The Ongoing Need for Human Oversight:

Humans have a secret power that machines lack: an understanding of feelings and tricky situations. They double-check what machines flag to make sure everything’s spot on.
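One common way to combine machines and humans is to let a model handle the cases it is confident about and send the uncertain middle to people. The Python sketch below assumes some classifier (not shown) outputs a harm score between 0 and 1; the `route` function, the `Decision` names, and the 0.2 and 0.9 thresholds are illustrative assumptions, not recommended values.

```python
from enum import Enum


class Decision(Enum):
    APPROVE = "approve"
    HUMAN_REVIEW = "human_review"
    REJECT = "reject"


def route(harm_score: float, approve_below: float = 0.2, reject_above: float = 0.9) -> Decision:
    """Route a post based on a (hypothetical) model's harm score in [0, 1].

    Confident calls are automated; the uncertain middle band goes to people.
    The default thresholds are illustrative assumptions only.
    """
    if not 0.0 <= harm_score <= 1.0:
        raise ValueError("harm_score must be between 0 and 1")
    if harm_score < approve_below:
        return Decision.APPROVE
    if harm_score > reject_above:
        return Decision.REJECT
    return Decision.HUMAN_REVIEW


for score in (0.05, 0.5, 0.97):
    print(score, route(score).value)
# 0.05 approve
# 0.5 human_review
# 0.97 reject
```

Widening the middle band sends more posts to human moderators: slower and costlier, but fewer automated mistakes.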

As you dig into content moderation, remember this team effort. Humans and tech ensure the online world stays friendly, fun, and respectful.

Future of Content Moderation

Ready to gaze into the crystal ball of content moderation? The future holds exciting possibilities that’ll shape how we hang out online. Check out what’s on the horizon:

- Advancements in AI and Technology:

Imagine tech getting even smarter, like your pet learning new tricks. AI is likely to get better at spotting tricky content, making the online world safer. We’re talking about smarter algorithms that read tone and understand context more like real pros.

- Collaboration Between Platforms, Policymakers, and Users:

Picture a superhero squad coming together. Online platforms, the folks making the rules, and us – the users – will work hand in hand. This means rules that make sense and an online playground everyone helps keep clean.

- User Education and Responsibility in Contributing to a Safe Digital Environment:

Imagine all of us becoming digital superheroes. As users, we’ll learn about what’s cool and not in the online world. Just like learning to recycle, we’ll contribute to a cleaner, kinder digital space by being responsible netizens.

So, get ready for a future where technology gets wiser, platforms and people team up for a better online experience, and everyone chips in to make sure the digital realm stays fun, respectful, and rad for everyone.

Conclusion

Phew, that was quite the exploration! Let’s sum up everything about content moderation and what lies ahead:

Remember those unsung heroes working behind the scenes? Content moderation keeps our digital hangouts clean, safe, and filled with positive vibes. It’s like having invisible hands ensuring everyone’s having a good time.

Change is a constant, and the online world is no exception. Content moderation will keep evolving as new trends and challenges arise. Stay curious and open-minded, as the journey is far from over.

As we sign off, let’s raise a virtual toast to the digital superheroes – the moderators, the tech wizards, and the responsible users – who make the online realm a welcoming haven for diverse voices, creative expression, and respectful interactions.

Here’s to a future where the online world reflects the best of humanity – kindness, understanding, and unity. Keep exploring, keep learning, and keep spreading the digital love!
